Crawl Budget Optimization: SEO Best Practices for 2025

Optimize your crawl budget in 2025 to fix indexing issues, improve Googlebot efficiency, and boost your site’s visibility with smart SEO strategies.

Summary:

Want to make sure Google is indexing the right pages on your website? That’s where crawl budget optimization comes in. In 2025, with websites growing in size and complexity, wasting crawl budget on irrelevant or duplicate pages can slow down indexing and reduce your site’s visibility in search results.

In this blog post, we’ll break down exactly how to optimize your crawl budget, what impacts it, and the technical steps you can take to help Googlebot focus on what really matters: your most valuable content.

What is Crawl Budget?

Crawl budget refers to the number of pages a search engine bot (like Googlebot) is willing and able to crawl on your site within a given time. It’s influenced by two main factors:

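Crawl Rate Limit – The maximum fetching rate Googlebot will use on your site (parallel connections and the delay between requests) without overloading your server. Google’s documentation also calls this the crawl capacity limit.

Crawl Demand – How much Google wants to crawl your site, based on how popular your URLs are and how often their content changes.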

If you’ve noticed delays in indexing or random pages showing up instead of your key content, your crawl budget might be misused.

Understanding Googlebot Crawl Behavior

Googlebot prioritizes pages based on popularity, freshness, and internal linking. It’s designed to be efficient—but it’s not perfect. Unoptimized sites with bloated structures, slow loading times, or misconfigured robots.txt files often face crawl inefficiencies.

Signs of crawl issues:

Important pages not getting indexed

Crawling non-essential or duplicate pages

Unnecessary crawling of filters, archives, or thin content

Common Indexing Issues That Waste Crawl Budget

Before optimizing crawl behavior, identify common crawl budget wasters:

Duplicate URLs (e.g., with tracking parameters or session IDs)

Soft 404s or broken links

Infinite loops in calendar pages or faceted navigation

Unnecessary redirects or redirect chains

Resources blocked in robots.txt that are still linked internally

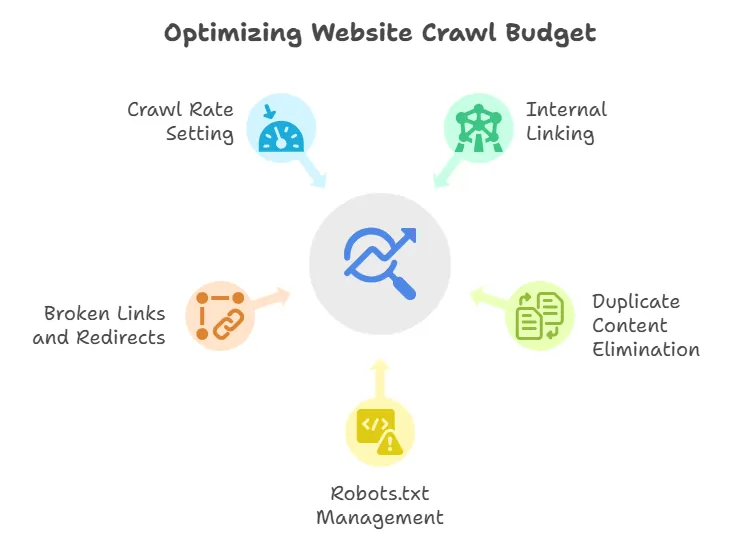

How to Optimize Crawl Budget in 2025

Optimizing your crawl budget ensures that Google focuses on your most valuable content. Here’s how to do it effectively:

1) Improve Internal Linking and Site Structure

Use a clear, logical hierarchy.

Ensure every important page is linked internally.

Keep the number of clicks from the homepage to important pages minimal.
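To make click depth concrete, here is a minimal sketch that measures it with a breadth-first search over an internal link graph. The `SITE_LINKS` dictionary and its URLs are hypothetical sample data; in practice you would build the graph from a crawler export.

```python
from collections import deque


def click_depths(links, start="/"):
    """Return the minimum number of clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
SITE_LINKS = {
    "/": ["/category/shoes", "/blog"],
    "/category/shoes": ["/product/red-sneaker"],
    "/blog": ["/blog/crawl-budget"],
    "/blog/crawl-budget": ["/product/red-sneaker"],
    "/orphan-page": [],  # exists, but nothing links to it
}

depths = click_depths(SITE_LINKS)
# Pages that exist in the graph but cannot be reached from the homepage.
orphans = sorted(set(SITE_LINKS) - set(depths))
```

Pages with a large depth value, or pages that land in `orphans`, are the ones to link more prominently.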

2) Eliminate Duplicate Content

Use canonical tags where appropriate.

Consolidate similar content or outdated pages.
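One way to find duplicate URLs worth canonicalizing is to strip parameters that never change the page content and group what remains. The sketch below assumes a hand-picked `TRACKING_PARAMS` set; the right list depends on your own site and analytics setup.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that do not change page content on this site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid", "sessionid"}


def normalize_url(url):
    """Drop tracking parameters, sort the rest, and lowercase the host."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    kept.sort()
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def group_duplicates(urls):
    """Map each normalized URL to its variants, keeping only real duplicates."""
    groups = {}
    for url in urls:
        groups.setdefault(normalize_url(url), []).append(url)
    return {canon: variants for canon, variants in groups.items() if len(variants) > 1}


# Hypothetical crawled URLs.
CRAWLED = [
    "https://example.com/shoes?color=red",
    "https://Example.com/shoes?utm_source=news&color=red",
    "https://example.com/shoes?color=red&gclid=abc",
    "https://example.com/boots",
]
duplicates = group_duplicates(CRAWLED)
```

Each key in `duplicates` is a candidate canonical URL, and its value lists the variants that should point to it.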

3) Use Robots.txt Wisely

Block low-value pages like admin, tag pages, or search results.

Don’t block resources (like CSS/JS) that are essential for rendering.
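Before deploying robots.txt changes, it can help to check a draft against a list of URLs you care about. This sketch uses Python’s standard `urllib.robotparser`; the rules and URLs are hypothetical. Note that this parser does simple prefix matching and does not implement Google’s wildcard extensions, so treat it as a rough check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft robots.txt: block low-value sections, leave assets alone.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /search
Disallow: /tag/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical URLs: three low-value pages, one rendering asset, one product page.
URLS_TO_CHECK = [
    "https://example.com/wp-admin/options.php",
    "https://example.com/search?q=shoes",
    "https://example.com/tag/sale/",
    "https://example.com/assets/site.css",
    "https://example.com/product/red-sneaker",
]

blocked = blocked_urls(ROBOTS_TXT, URLS_TO_CHECK)
# Rendering resources should never appear in the blocked list.
blocked_assets = [url for url in blocked if url.endswith((".css", ".js"))]
```

If `blocked_assets` is not empty, the draft is blocking files that Googlebot needs to render your pages.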

4) Fix Broken Links and Redirect Loops

Regularly audit for 404 errors.

Simplify redirect chains to no more than 1 hop where possible.
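Redirect chains and loops are easy to detect once you have a map of source to target URLs. The sketch below assumes a hypothetical `REDIRECTS` dictionary (for example, exported from your server config or a crawl) and works offline without making any requests.

```python
def resolve_redirect(url, redirects):
    """Follow redirects from url; return (final_url, hops, is_loop)."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen:  # we came back to a URL already visited
            return url, hops, True
        seen.add(url)
    return url, hops, False


def flatten_redirects(redirects):
    """Point every source straight at its final destination, skipping loops."""
    flat = {}
    for source in redirects:
        final, _hops, is_loop = resolve_redirect(source, redirects)
        if not is_loop:
            flat[source] = final
    return flat


# Hypothetical redirect map: one two-hop chain and one loop.
REDIRECTS = {
    "/old": "/older-new",
    "/older-new": "/new",
    "/a": "/b",
    "/b": "/a",
}

chain = resolve_redirect("/old", REDIRECTS)
loop = resolve_redirect("/a", REDIRECTS)
flat = flatten_redirects(REDIRECTS)
```

Any source whose hop count is above 1 is a chain to shorten, and anything flagged as a loop needs a manual fix.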

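5) Reduce the Crawl Rate (If Needed)

Google retired the crawl rate limiter setting in Search Console in early 2024, so there is no longer a manual control there.

If your server is overloaded, Google’s documented short-term option is to return 500, 503, or 429 status codes to crawl requests for a brief period (hours to a day or two).

Otherwise, let Googlebot decide; it adjusts its crawl rate automatically based on how your server responds.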
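One server-side way to slow crawling temporarily is to answer crawler requests with a 503 while the server is under heavy load, which Google’s documentation describes as a short-term measure. The sketch below is only an illustration: `crawl_response`, `load_ratio`, and the 0.9 threshold are hypothetical names and values, and whether a crawler honors `Retry-After` is not guaranteed. Serving errors for more than a couple of days risks pages being dropped from the index.

```python
def crawl_response(load_ratio, user_agent, threshold=0.9):
    """Return (status_code, headers) for a request given current server load.

    load_ratio is an assumed 0.0-1.0 measure of how busy the server is.
    """
    # User-agent strings can be spoofed; this check is for illustration only.
    is_crawler = "googlebot" in user_agent.lower()
    if is_crawler and load_ratio >= threshold:
        # Ask the crawler to come back later instead of serving the page.
        return 503, {"Retry-After": "3600"}
    return 200, {}


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
overloaded = crawl_response(0.95, GOOGLEBOT_UA)
normal = crawl_response(0.40, GOOGLEBOT_UA)
```

Regular visitors are never throttled in this sketch; only crawler requests during overload get the 503.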

Server Log Analysis: Your Secret Weapon

Server log files give a first-hand record of how bots interact with your site. They help you answer:

What pages are crawled most often?

Is Googlebot wasting time on irrelevant pages?

Are important pages being ignored?

Using tools like Screaming Frog Log File Analyser, you can spot crawl budget leaks and fix them efficiently.
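If you want a quick look before reaching for a dedicated tool, a short script can answer the first two questions. This sketch assumes your server writes the common "combined" access log format; the sample lines are made up, and matching on the user-agent string alone does not prove a request really came from Google.

```python
import re
from collections import Counter

# Pattern for the combined log format (an assumption about your server config).
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(log_lines):
    """Count Googlebot requests per path and how many carried a query string."""
    hits = Counter()
    parameterized = 0
    for line in log_lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue
        path = match.group("path")
        if "?" in path:
            parameterized += 1
        hits[path.split("?", 1)[0]] += 1  # group URL variants by path
    return hits, parameterized


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Made-up sample log lines.
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Jan/2025:10:00:00 +0000] "GET /product/red-sneaker HTTP/1.1" 200 5120 "-" "' + GOOGLEBOT_UA + '"',
    '66.249.66.1 - - [10/Jan/2025:10:00:05 +0000] "GET /search?q=a HTTP/1.1" 200 2048 "-" "' + GOOGLEBOT_UA + '"',
    '66.249.66.1 - - [10/Jan/2025:10:00:09 +0000] "GET /search?q=b HTTP/1.1" 200 2048 "-" "' + GOOGLEBOT_UA + '"',
    '203.0.113.7 - - [10/Jan/2025:10:01:00 +0000] "GET /product/red-sneaker HTTP/1.1" 200 5120 "-" "Mozilla/5.0 Firefox/120.0"',
]

hits, parameterized = googlebot_hits(SAMPLE_LOG)
top_paths = hits.most_common(5)
```

A high share of parameterized hits, or a low-value path at the top of `top_paths`, is a sign that crawl budget is leaking.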

Blocked Resources in Robots.txt

Blocking essential resources like JavaScript or CSS in robots.txt can prevent proper rendering of your site. This doesn’t just waste crawl budget; it can also hurt rankings.

Tips:

Don’t block JS/CSS unless you’re sure it’s unnecessary.

Check the robots.txt report in Google Search Console, and use the “URL Inspection” tool to confirm whether a specific URL is blocked.

Prioritize High-Value Pages

Focus Googlebot’s attention where it matters:

Product and category pages

Blog posts driving traffic

High-converting landing pages

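Remove or noindex thin, low-value pages like outdated press releases, tag pages, or duplicate archives. Keep in mind that Googlebot still has to fetch a page to see its noindex tag, so removing the page (a 404 or 410) or blocking it in robots.txt saves more crawl budget than noindex alone.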

Crawl Budget Tips for Large Websites

Large sites with thousands of URLs need advanced strategies:

Generate and submit XML sitemaps regularly.

Use hreflang correctly for international sites.

Monitor crawl stats in Google Search Console monthly.

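If your site relies heavily on JavaScript, consider server-side or static rendering; Google now describes dynamic rendering as a workaround rather than a long-term solution.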
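For the XML sitemap item in the list above, generation is easy to automate. Here is a minimal sketch using Python’s standard library; the `PAGES` list is hypothetical, and a real site would pull URLs and last-modified dates from its CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML (as bytes) from (url, lastmod) pairs.

    lastmod is expected as a YYYY-MM-DD string.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Hypothetical high-value pages and their last-modified dates.
PAGES = [
    ("https://example.com/", "2025-01-10"),
    ("https://example.com/product/red-sneaker", "2025-01-12"),
]

sitemap_xml = build_sitemap(PAGES)
```

Listing only canonical, indexable URLs keeps the sitemap a clean signal of what you want crawled.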

Tools to Monitor and Manage Crawl Budget

Use these tools to stay on top of crawl efficiency:

Google Search Console – Crawl stats, indexing issues

Screaming Frog – Crawl simulation, canonical checks

Ahrefs or Semrush – Identify crawl inefficiencies and monitor overall SEO health

JetOctopus / OnCrawl – Deep log file analysis (great for enterprise)

Conclusion

As websites continue to grow in size and complexity, crawl budget optimization is no longer optional—it’s essential. If search engines are wasting time on low-value or duplicate pages, your most important content could be left behind in the crawl queue. That means slower indexing, reduced visibility, and missed SEO opportunities.

By understanding how Googlebot behaves, fixing common indexing issues, and prioritizing high-value pages, you can guide crawlers efficiently through your site. Whether you’re managing a small blog or a massive eCommerce platform, regularly analyzing server logs, cleaning up your site structure, and using tools like Google Search Console and Screaming Frog can help you stay ahead.

In 2025 and beyond, smart SEO isn’t just about creating great content—it’s about making sure Google sees it.